Crazy for History

Sam Wineburg

In 1917, the year the United States went to war, history erupted onto the pages of the American Psychological Association's Journal of Educational Psychology. J. Carleton Bell, the journal's managing editor and a professor at the Brooklyn Training School for Teachers, began his tenure with an editorial entitled "The Historic Sense." (A companion editorial examined the relation of psychology to military problems.) Bell claimed that the study of history provided an opportunity for thinking and reflection, qualities lacking in many classrooms.1 Bell invited his readers to ponder two questions: "What is the historic sense?" and "How can it be developed?" Such questions, he asserted, did not concern only the history teacher; they were ones "in which the educational psychologist is interested, and which it is incumbent upon him to attempt to answer." To readers who wondered where to locate the elusive "historic sense," Bell offered clues. Presented with a set of primary documents, one student produces a coherent account while another assembles "a hodgepodge of miscellaneous facts." Similarly, some college freshmen "show great skill in the orderly arrangement of their historical data" while others "take all statements with equal emphasis . . . and become hopelessly confused in the multiplicity of details." Did such findings reflect "native differences in historic ability" or were they the "effects of specific courses of training"? Such questions opened "a fascinating field for investigation" for the educational psychologist.2 Bell's questions still nag us today. What is the essence of historical understanding? How can historical interpretation and analysis be taught? What is the role of instruction in improving students' ability to think? In light of his foresight, it is instructive to examine how Bell carried out his research agenda. In a companion article to his editorial, Bell and his colleague David F. 
McCollum presented a study that began by laying out five aspects of the historic sense:

1. "The ability to understand present events in light of the past."
2. The ability to sift through the documentary record--newspaper articles, hearsay, partisan attacks, contemporary accounts--and construct "from this confused tangle a straightforward and probable account" of what happened.
3. The ability to appreciate a historical narrative.
4. "Reflective and discriminating replies to 'thought questions' on a given historical situation."
5. The ability to answer factual questions about historical personalities and events.3

The authors conceded that the fifth aspect was "the narrowest, and in the estimation of some writers, the least important type of historical ability." Yet, they acknowledged, it was the "most readily tested." In a fateful move, Bell and McCollum elected the path of least resistance: of their five possibilities only one--the ability to answer factual questions--was chosen for study. While perhaps the first instance, this was not the last in which ease of measurement--not priority of subject matter understanding--determined the shape and contour of a research program.4 Bell and McCollum created the first large-scale test of factual knowledge in United States history and administered it to fifteen hundred Texas students in 1915-1916. They compiled a list of names (for example, Thomas Jefferson, John Burgoyne, Alexander Hamilton, Cyrus H. McCormick), dates (1492, 1776, 1861), and events (the Sherman Antitrust Act, the Fugitive Slave Act, the Dred Scott decision) that history teachers said every student should know. 
They administered their test at the upper elementary level (fifth through seventh grades), in high schools (in five Texas districts: Houston, Huntsville, Brenham, San Marcos, and Austin), and in colleges (at the University of Texas, Austin, and at two teacher-training institutions, South-West Texas State Normal School and Sam Houston Normal Institute). Across the board, results disappointed. Students recognized 1492 but not 1776; they identified Thomas Jefferson but often confused him with Jefferson Davis; they uprooted the Articles of Confederation from the eighteenth century and plunked them down in the Confederacy; and they stared quizzically at 1846, the beginning of the U.S.-Mexico war, unaware of its place in Texas history. Nearly all students recognized Sam Houston as the father of the Texas republic but had him marching triumphantly into Mexico City, not vanquishing Antonio Lopez de Santa Anna at San Jacinto. The overall score at the elementary level was a dismal 16 percent. In high school, after a year of history instruction, students scored a shabby 33 percent, and in college, after a third exposure to history, scores barely approached the halfway mark (49 percent). The authors concluded that studying history in school led only to "a small, irregular increase in the scores with increasing academic age." Anticipating jeremiads by secretaries of education and op-ed columnists a half century later, Bell and McCollum indicted the educational system and its charges: "Surely a grade of 33 in 100 on the simplest and most obvious facts of American history is not a record in which any high school can take great pride."5 By the next world war, hand-wringing about students' historical benightedness had moved from the back pages of the Journal of Educational Psychology to the front pages of the New York Times. "Ignorance of U.S. 
History Shown by College Freshmen," trumpeted the headline on April 4, 1943, a day when the main story reported that George Patton's troops had overrun those of Erwin Rommel at Al-Guettar. Providing support for the earlier claim made by the historian Allan Nevins that "young people are all too ignorant of American history," the survey showed that a scant 6 percent of the seven thousand college freshmen tested could identify the thirteen original colonies, while only 15 percent could place William McKinley as president during the Spanish-American War. Less than a quarter could name two contributions made by either Abraham Lincoln or Thomas Jefferson. Often, students were simply confused. Abraham Lincoln "emaciated the slaves" and, as first president, was father of the Constitution. One graduate of an eastern high school, responding to a question about the system of checks and balances, claimed that Congress "has the right to veto bills that the President wishes to be passed." According to students, the United States expanded territorially by purchasing Alaska from the Dutch, the Philippines from Great Britain, Louisiana from Sweden, and Hawaii from Norway. A Times editorial excoriated those "appallingly ignorant" youth. 6 The Times 's breast-beating resumed in time for the bicentennial celebration, when the newspaper commissioned a second test, with Bernard Bailyn of Harvard University leading the charge. With the aid of the Educational Testing Service (ETS), the Times surveyed nearly two thousand freshmen on 194 college campuses. On May 2, 1976, the results rained down on the bicentennial parade: "Times Test Shows Knowledge of American History Limited." Of the 42 multiple-choice questions on the test, students averaged an embarrassing 21 correct--a failing score of 50 percent. 
The low point for Bailyn was that more students believed that the Puritans guaranteed religious freedom (36 percent) than understood religious toleration as the result of rival denominations seeking to cancel out each other's advantage (34 percent). This "absolutely shocking" response rendered the voluble Bailyn speechless: "I don't know how to explain it."7 Results from the 1987, 1994, and 2001 administrations of the National Assessment of Educational Progress (NAEP, known informally as the "Nation's Report Card") have shown little deviation from earlier trends.8 In the wake of the 2001 test came the same stale headlines ("Kids Get 'Abysmal' Grade in History: High School Seniors Don't Know Basics," USA Today); the same refrains of cultural decline ("a nation of historical nitwits," wagged the Greensboro [North Carolina] News and Record); the same holier-than-thou indictments of today's youth ("dumb as rocks," hissed the Weekly Standard); and the same boy-who-cried-wolf predictions of impending doom ("when the United States is at war and under terrorist threat," young people's lack of knowledge is particularly dangerous).9 Scores on the 2001 test, after a decade of the "standards movement," were virtually identical to their predecessors. Six in ten seniors "lack even a basic knowledge of American history," wrote the Washington Post, results that NAEP officials castigated as "awful," "unacceptable," and "abysmal." "The questions that stumped so many students," lamented Secretary of Education Rod Paige, "involve the most fundamental concepts of our democracy, our growth as a nation, and our role in the world." As for the efficacy of standards in the states that adopted them, the test yielded no differences between students of teachers who reported adhering to standards and those who did not. 
Remarked a befuddled Paige, "I don't have any explanation for that at all."10 To many commentators, what is at stake goes beyond whether today's teens can distinguish whether eastern bankers or western ranchers supported the gold standard.11 Pointing to the latest NAEP results, the Albert Shanker Institute, sponsored by the American Federation of Teachers, claimed in a blue-ribbon report, "Education for Democracy," that "something has gone awry. . . . We now have convincing evidence that our students are woefully ignorant of who we are as Americans," indifferent to "the common good," and "disconnected from American history."12 One wonders what evidence this committee "now" possesses that has not been gathering moss since 1917 when Bell and McCollum hand tallied fifteen hundred student surveys. Explanations of today's low scores disintegrate when applied to results from 1917--history's apex as a subject in the school curriculum.13 No one can accuse the Texas teachers of 1917 of teaching process over content or serving up a tepid social studies curriculum to bored students--the National Council for the Social Studies (founded in 1921) did not even exist. Instead of being poorly trained and laboring under harsh conditions with scant public support, the Texas pedagogues were among the most educated members of their communities and commanded wide respect. ("The high schools of Houston and Austin have the reputation of being very well administered and of having an exceptionally high grade of teachers," wrote Bell and McCollum--it is hard to imagine that sentence being written about today's schools.)14 Historical memory shows an especial plasticity when it turns to assessing young people's character and capability. 
The same Diane Ravitch, educational historian and member of the NAEP governing board, who in May 2002 expressed alarm that students "know so little about their nation's history" and possess "so little capacity to reflect on its meaning" did a one-eighty eleven months later when rallying Congress for funds in history education: Although it is customary for people of a certain age to complain about the inadequacies of the younger generation, such complaints ring hollow today. . . . What we have learned in these past few weeks is that this younger generation, as represented on the battlefields of Iraq, may well be our finest generation.15 The phrase "our finest generation" of course echoes the journalist Tom Brokaw's characterization of the men and women who fought in World War II as the "greatest generation." Those were the college students who in 1943 abandoned the safety of the quadrangle for the hazards of the beachhead. Yet only in our contemporary mirror do they look "great." Back then, grown-ups dismissed them as knuckleheads, even questioning their ability to fight. Writing in the New York Times Magazine in May 1942, a fretful Allan Nevins wondered whether a historically illiterate fighting force might be a national liability. "We cannot understand what we are fighting for unless we know how our principles developed." If "knowing our principles" means scoring well on objective tests, we might want to update that thesis.16 A sober look at a century of history testing provides no evidence for the "gradual disintegration of cultural memory" or a "growing historical ignorance." The only thing growing seems to be our amnesia of past ignorance. If anything, test results across the last century point to a peculiar American neurosis: each generation's obsession with testing its young only to discover--and rediscover--their "shameful" ignorance. The consistency of results across time casts doubt on a presumed golden age of fact retention. 
Appeals to it are more the stuff of national lore and wistful nostalgia for a time that never was than a claim that can be anchored in the documentary record.17

Assessing the Assessors

The statistician Dale Whittington has shown that when results from the early part of the twentieth century are put side by side with those of recent tests, today's high school students do about as well as their parents, grandparents, and great-grandparents. That is remarkable when we compare today's near-universal enrollments with the elitist composition of the high school in the teens and early twenties. Young people's knowledge hovers with amazing consistency around the 40-50 percent mark--despite radical changes in the demographics of test takers across the century.18 The world has turned upside down in the last one hundred years, but students' ignorance of history has marched stolidly in place. Given changes in the knowledge historians deem most important, coupled with changes in who sits for the tests, why have scores remained flat? Complex questions often require complex answers, but not here. Kids look dumb on history tests because the system conspires to make them look dumb. The system is rigged. As practiced by the big testing companies, modern psychometrics guarantees that test results will conform to a symmetrical bell curve. Since the thirties, the main tool used to create these perfectly shaped bells has been the multiple-choice test, composed of many items, each with its own stem and set of alternatives. One alternative is the correct (or "keyed") answer; the others (in testers' argot, "distracters") are false. In the early days of large-scale testing, the unabashed goal of the multiple-choice item was to rank students, rather than to determine if they had attained a particular level of knowledge. A good item created "spread" among students by maximizing their differences. A bad item, conversely, created little spread; nearly everyone got it right (or wrong). 
The best way to ensure that most students would land under the curve's bell was to include a few questions that only the best students got right, a few questions that most got right, and a majority of questions that between 40 and 60 percent got right. In such examinations (known as "norm-referenced" tests because individual scores are compared against nationally representative samples, or "norms"), items are extensively field-tested to see if they behave properly. The testers' language is revealing: A good item is of medium difficulty and has a high "discrimination index"; students with higher scores will tend to get it right, and students with lower scores will tend to get it wrong. Items that deviate from this profile are dropped. In other words, only the questions that array students in a neatly shaped bell curve make it to the final version of the test.19 When large-scale testing was introduced into American classrooms in the 1930s, it ran counter to teachers' notions of what constituted average, below average, and exemplary performance. Most teachers believed that a failing score should be below 75 percent, and that an average score should be about 85 percent, a grade of B. Testing companies knew there would be a culture clash, so they prepared materials to allay teachers' concerns. In 1936 the Cooperative Test Service of the American Council on Education, ETS 's forerunner, explained the new scoring system to teachers: many teachers feel that each and every question should measure something, which all or at least a majority of well taught students should know or be able to do. When applied to tests of the type represented by the Cooperative series, these notions are serious misconceptions. . . . Ideally, the test should be adjusted in difficulty [so] that the least able students will score near zero, the average student will make about half the possible score, and the best students will just fall short of a perfect score. . . . 
The immediate purpose of these tests is to show, for each individual, how he compares in understanding and ability to use what he has learned with other individuals.20 The normal curve's legacy accompanies us today in the tests near and dear to the hearts of American high school students: the SAT (Scholastic Assessment Test). No matter how intelligent a given cohort of young people may be, no matter what miracles the standards movement may perform, no matter how much we close the "achievement gap" between students of different races and classes, it is impossible for the majority of students to score 1600. If that happened, the normal curve would not be normal, and Lake Wobegon, where "all of the children are above average," would not be fictional.21 It is impossible to have a basketball league in which every team wins most of its games. Similarly with the normal curve: there can be no winners without losers.
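
The item-screening logic described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the procedure of any actual testing company: the function names, the toy score matrix, the 0.4-0.6 difficulty window, and the 0.2 correlation cutoff are all hypothetical, and the point-biserial item-total correlation is used here as one common stand-in for a "discrimination index." Real tests also keep a few very easy and very hard items, which this sketch ignores.

```python
from statistics import mean, pstdev


def difficulty(item):
    """Proportion of students who answered the item correctly (0/1 scores)."""
    return mean(item)


def point_biserial(item, totals):
    """Pearson correlation between a 0/1 item column and students' total scores.

    Returns 0.0 when either column has no variance (e.g. everyone got it right).
    """
    sd_item, sd_total = pstdev(item), pstdev(totals)
    if sd_item == 0 or sd_total == 0:
        return 0.0
    m_item, m_total = mean(item), mean(totals)
    cov = mean([(x - m_item) * (t - m_total) for x, t in zip(item, totals)])
    return cov / (sd_item * sd_total)


def select_items(scores, min_p=0.4, max_p=0.6, min_r=0.2):
    """Keep items of medium difficulty that correlate with the total score.

    scores: list of rows, one per student, each a list of 0/1 item scores.
    The thresholds are illustrative assumptions, not published cutoffs.
    """
    totals = [sum(row) for row in scores]
    kept = []
    for j in range(len(scores[0])):
        item = [row[j] for row in scores]
        if min_p <= difficulty(item) <= max_p and point_biserial(item, totals) >= min_r:
            kept.append(j)
    return kept


# Hypothetical pilot data: six students (rows), five items (columns).
# Item 0 is answered correctly by everyone; item 2 is answered correctly
# only by the students with the lowest totals.
scores = [
    [1, 1, 0, 1, 1],
    [1, 1, 0, 1, 1],
    [1, 1, 0, 1, 0],
    [1, 0, 1, 0, 1],
    [1, 0, 1, 0, 0],
    [1, 0, 1, 0, 0],
]

print(select_items(scores))  # items 1, 3, and 4 survive the screen
```

In this toy data the item everyone answers correctly is dropped because it produces no "spread," and the item that low scorers get right is dropped because it correlates negatively with the total, regardless of what either item asks about.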

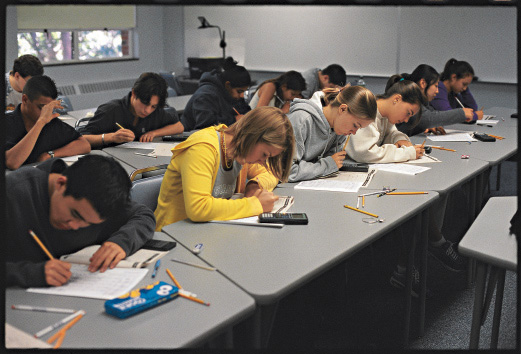

In spite of the odds against their scoring well, students at an SAT summer camp in Milton, Massachusetts, spend at least five hours a day studying and taking practice tests. Courtesy National Center for Public Policy and Higher Education.

If all students get an item correct, they do not necessarily know the material; the item's distracters may be lousy. Therefore, when we examine the names and events included among the distracters in the 2001 NAEP examination--for example, the mutiny of British forces under General Howe, the Wobblies, the Morrill Land Grant Act, and the relation between the coinage of silver and an economic downturn--we must remember that those facts do not appear because of their inherent worth or because they were taught in the high school curriculum or even because a blue-ribbon commission declared that every American high school student should know them. Rather, General Howe, the Wobblies, the Morrill Land Grant Act, and countless other bits of information appear on the test because they "work" mathematically; they snare their targets in sufficient numbers to boost the item's "discrimination index." It is modern psychometrics in the driver's seat, not sound historical judgment.22 What would happen if the smart went down with the dumb, or, to put it more delicately, if students who knew the most history were stumped by items answered correctly by the less able? Again, the technology of testing makes sure that does not happen. Large-scale tests rely on a mathematical technique known as the biserial correlation in which each test item is linked mathematically to students' total scores and individual items that do not conform to the overall test pattern are eliminated from the final version.23 Imagine an item about the Crisis magazine, which W. E. B. Du Bois edited, that is answered correctly at higher rates by black students than by whites, while overall white students outscore blacks on the test by thirty points. 
The resulting correlation for the Du Bois item would be zero or negative, and its chances of survival would be slim--irrespective of whether historians thought the information was essential to test.24 Technically, such examinations as the history NAEP are "standards-based" (hypothetically, every student should be able to "reach standard") rather than fit to a predetermined curve. But the practices of item analysis, discrimination, biserial or item-test correlations, and spread are so ingrained in psychometric culture that for all intents and purposes, results from all large-scale objective tests fit the traditional bell curve.25 Indeed, this was confirmed by Steven Koffler, an administrator with the NAEP program that designed the 1987 history test, who reported that traditional item analysis and biserial correlations were used to create that supposedly standards-based test.26 What does all of this mean, practically? First, in addition to handicapping students who possess different historical knowledge from those in the mainstream, it means that no national test can allow students to show themselves historically literate. If ETS statisticians determined during pilot testing that most students could identify George Washington, "The Star-Spangled Banner," Rosa Parks, the dropping of the bomb on Hiroshima, slavery as a main cause of the Civil War, the purpose of Auschwitz, Babe Ruth, Harriet Tubman, the civil rights movement, the "I Have a Dream" speech, all those items would be eliminated from the test, for such questions fail to discriminate among students. So, when the next national assessment rolls around in 2010, do not hold your breath for the headline announcing, " U.S. schoolchildren score well on the 100 most basic facts of American history." 
The architecture of modern psychometrics ensures that will never happen--no matter how good a job we do in the classroom.27

Assessing the Future

Whether or not students have known history in the past begs our present concerns. Shouldn't we be worried when two-thirds of seventeen-year-olds cannot date the Civil War or half cannot identify the Soviet Union as our ally in World War II? No one concerned with young people's development should remain passive in the face of such results. Any thinking person would insist that such knowledge is critical to informed citizenship. In this sense, E. D. Hirsch, an educational critic and proponent of "cultural literacy," is right when he claims that without a framework for understanding--the ability to identify key figures, major events, and chronological sequences--the world becomes unintelligible and reading a newspaper well-nigh impossible. So why is it that many young people emerge from high school lacking this core knowledge?28 One narrative popular on both sides of the political aisle (as well as among historians who should know better) is that the social studies lobby and its agents warp young minds in ways reminiscent of a North Korean reeducation camp, wasting their time on mind-numbing "critical thinking" skills devoid of content. The problem with this armchair analysis is that, although we might find some support for it in the education school curriculum, the empirical data on what goes on in classrooms paint a different picture. Summarizing results from the 1987 national assessment, Diane Ravitch and Chester E. Finn concluded that in the typical social studies classroom, students listen to the teacher explain the day's lesson, use the textbook, and take tests. Occasionally they watch a movie. Sometimes they memorize information or read stories about events and people. 
They seldom work with other students, use original documents, write term papers, or discuss the significance of what they are studying.29 Similar conclusions emerged from a study of history and social studies instruction in Indiana in the early 1960s, as well as from John I. Goodlad's A Place Called School, the most extensive observational study of schooling in the twentieth century, involving twenty ethnographers in 1,350 classrooms observing 17,163 students. The high schools visited by Goodlad's team in 1977 all offered courses in American history and government. Yet, while teachers in those courses overwhelmingly claimed that their goals fit snugly with "inquiry methods" and "hands-on learning," their tests told a different story, requiring little more than the recall of names and dates and memorized information. The topics of the history curriculum are of "great human interest," wrote Goodlad, but "something strange seems to have happened to them on their way to the classroom." History becomes removed from its "intrinsically human character, reduced to the dates and places readers will recall memorizing for tests."30 Even at the height of the "new social studies" in the late 1960s and early 1970s, those who ventured into classrooms saw something different from the image conveyed by then-fashionable teachers' magazines. In the history classrooms Charles Silberman visited in the late 1960s, the great bulk of students' time was "devoted to detail, most of it trivial, much of it factually incorrect, and almost all of it unrelated to any concept, structure, cognitive strategy, or indeed anything other than the lesson plan." In a 1994 national survey of about fifteen hundred Americans conducted by Indiana University's Center for Survey Research (under the direction of Roy Rosenzweig and David Thelen), adults were asked to "pick one word or phrase to describe your experience with history classes in elementary or high school." 
"Boring" was the single most frequent description. Instruction lacked verve not because of projects, oral histories, simulations, or any of the other "progressive" ideas drubbed in print by E. D. Hirsch and others, but because of what the historian of pedagogy Larry Cuban has called "persistent instruction"--a single teacher standing in front of a group of 25-40 students, talking. A sixty-four-year-old Floridian described it this way: "The teacher would call out a certain date and then we would have to stand at attention and say what the date was. I hated it."31 Textbooks dominate instruction in today's high school history classes. These thousand-plus-page behemoths are written to satisfy innumerable interest groups, packing in so much detail that all but the most ardent become daunted.32 Many social studies teachers are forced to rely on the books because they lack adequate subject matter knowledge. Drawing on data from the National Center for Educational Statistics, Ravitch has shown that among those who teach history at the middle and secondary levels, only 18.5 percent possess a major (or minor!) in the discipline. Thus over 80 percent of today's history teachers did not study it in depth in college. While states have tripped over each other to beef up content standards for students, they have left untouched the minimalist requirements for those who teach history. The places that train teachers, colleges of education, are held hostage by accreditation agencies such as the National Council for Accreditation of Teacher Education ( NCATE ), which promote every cause, but not the stance that teachers should possess deep knowledge of their subject matter. Although federal efforts such as the Teaching American History program help individual teachers (and provide a boondoggle to many in the profession, including this author), at a policy level they are a colossal waste of money. 
One does not repair a rickety house by commissioning a paint job--one brings in a backhoe and starts digging up the foundation. Helping veteran teachers develop new subject matter knowledge for two weeks in the summer is a worthy cause, but not one likely to have any lasting impact. Change will occur only by intervening at the policy level to ensure that those who teach history know it themselves. Until that happens, we should expect no miracles.33 What should we do while we are waiting for the revolution? First, we should admit that we cannot have our cake and eat it too. We cannot insist that every student know when World War II began and who our allies were while giving tests that ask about the battles of Saratoga and Oriskany. Today's standards documents, written to satisfy special interest groups and out-of-touch antiquarians, are a farce. When the majority of kids leaving school cannot date the Civil War and are confused about whether the Korean War predated or followed World War II, how far do we want to go in insisting that seventeen-year-olds know about the battle at Fort Wagner, Younghill Kang's East Goes West, Carrie Chapman Catt, Ludwig von Mises, and West Virginia State Board of Education v. Barnette (Massachusetts State History Standards); John Hartranft (Pennsylvania State History Standards); Henry Bessemer, Dwight Moody, Hiram Johnson, the Palmer raids, the 442nd Regiment Combat Team, Federalist Number 78, and Adarand Constructors, Inc. v. Peña (California History-Social Science Standards, grades 11 and 12); the policy of Bartolomé de Las Casas toward Indians in South America, Charles Bulfinch, Patience Wright, Charles Willson Peale, and the economic effects of the Townshend Acts ( NAEP standards)? As William Cronon remarked after examining another pie-in-the-sky scheme, the ill-fated National History Standards, he would have been ecstatic if his University of Wisconsin graduate students had known half of this stuff. 
Or, in words that today's teenagers might use, let's get real.34

None of the Above

The dilemmas we face today in assessing young people's knowledge differ little from those confronted by J. Carleton Bell and David F. McCollum in 1917. Few historians would argue that large-scale objective tests capture the range of meanings we attribute to the "historic sense." We use these tests and will do so in the future, not because they are historically sound or because they predict future engagement with historical study, but because they can be read by machines that produce easy-to-read graphs and bar charts. The tests comfort us with the illusion of systematicity--not to mention that scoring them costs a lot less than the alternatives. Psychologists define a crazy person as someone who keeps doing the same thing but expects a different result. As long as textbooks dominate instruction, as long as the ETS dictates the history American children should know, as long as states continue to play a "mine-is-bigger-than-yours" standards game for students while hiding from view content-free teacher standards, as long as historians roll over and play dead in front of number-wielding psychometricians, we can have all the blue-ribbon commissions in the world but the results will be the same. Technology may have changed since 1917, but the capacity of the human mind to retain information has not. Students could master and retain the piles of information contained in 1917 or 1943 textbooks no better than they can retain what fills today's gargantuan tomes. Light rail excursions through mounds of factual information may be entertaining, but such dizzying tours leave few traces in memory. The mind demands pattern and form, and both are built up slowly and require repeated passes, with each pass going deeper and probing further.35 If we want young people to know more history, we need to draw on a concept from medicine: triage. As the University of Tennessee's Wilfred McClay explains,

Mechanical testing tempts us with the false promise of efficiency, a lure that whispers that there is an easier, less costly, more scientific way. But the truth is that the blackening of circles prepares us only to blacken more circles in the future. The sooner we realize this, the sooner we will be redeemed from our craziness.

Sam Wineburg is professor of education at Stanford University and was previously professor of cognitive studies in education and adjunct professor of history at the University of Washington. I wish to acknowledge Gary Kornblith for his counsel and patience during the preparation of this article and Shoshana Wineburg and Simone Schweber for their keen editorial acumen. Cathy Taylor provided essential technical advice and saved me from error. I thank them all. Readers may contact Wineburg at <wineburg@stanford.edu>.

1 J. Carleton Bell, "The Historic Sense," Journal of Educational Psychology, 8 (May 1917), 317-18.

2 Ibid.

3 J. Carleton Bell and David F. McCollum, "A Study of the Attainments of Pupils in United States History," Journal of Educational Psychology, 8 (May 1917), 257-74, esp. 257-58.

4 Ibid., 258.

5 Ibid., 268-69. Five years later the Bell and McCollum survey was replicated, though on a much smaller scale. See D. H. Eikenberry, "Permanence of High School Learning," Journal of Educational Psychology, 14 (Nov. 1923), 463-81. See also Garry C. Meyers, "Delayed Recall in History," ibid., 8 (May 1917), 275-83.

6 New York Times, April 4, 1943, p. 1; Allan Nevins, "American History for Americans," New York Times Magazine, May 3, 1942, pp. 6, 28. The Times survey followed an earlier exposé on the scarcity of required courses in American history at the college level. See New York Times, June 21, 1942, p. 1. For the editorial, see ibid., April 4, 1943, p. 32. On how the general media reported this survey, see Richard J. Paxton, "Don't Know Much about History--Never Did," Phi Delta Kappan, 85 (Dec. 2003), 264-73.

7 New York Times, May 2, 1976, pp. 1, 65.
8 Diane Ravitch and Chester E. Finn, What Do Our Seventeen-Year-Olds Know? A Report on the First National Assessment of History and Literature (New York, 1987); Educational Testing Service, National Center for Education Statistics, NAEP 1994 U.S. History Report Card: Findings from the National Assessment of Educational Progress (Washington, 1996) <http://nces.ed.gov/pubsearch/pubsinfo.asp?pubid=96085> (Nov. 24, 2003). Compare Edgar B. Wesley, American History in Schools and Colleges (New York, 1944). At the height of Cold War anxieties, McCall's commissioned a survey of college graduates' knowledge of the Soviet Union. Over a quarter could not name Moscow as the capital, and nearly 80% were unable to name a single Russian author. Harrison E. Salisbury, "What Americans Don't Know about Russia," McCall's Magazine, 84 (June 1957), 40-41.
9 USA Today, May 10-12, 2002, p. 1; Greensboro [North Carolina] News and Record, May 13, 2002, p. A8; Lee Bockhorn, "History in Crisis," Weekly Standard, May 13, 2002, available at LexisNexis Academic. Diane Ravitch quoted in the Palm Beach [Florida] Post, May 10, 2002, p. 13a.
10 Michael A. Fletcher, "Students' History Knowledge Lacking, Test Finds," Washington Post, May 9, 2002 <http://www.washingtonpost.com/ac2/wp-dyn/A60096-2002May9?language=printer/> (Dec. 4, 2003). For the quotations from National Assessment of Educational Progress (NAEP) officials, see USA Today, May 10-12, 2002, p. 1. Rod Paige quoted in David Darlington, "U.S. Department of Education Releases Results of Latest U.S. History Test," Perspectives Online, Summer 2002 <http://www.theaha.org/perspectives/issues/2002/Summer/naep.cfm> (Nov. 24, 2003). In the 2001 administration of NAEP, scores on the fourth grade test rose four points from 1994, and on the eighth grade test three points (from 259 to 262 out of 500 total). Twelfth graders' scores remained stagnant.
The NAEP examination in history is not solely multiple-choice but includes some "constructed-response" (short answer) questions. Sample questions are available at <http://nces.ed.gov/nationsreportcard/ITMRLS/pickone.asp> (Dec. 4, 2003).
11 Students did surprisingly well on the gold standard item, with 56% answering it correctly. See items for the 2001 history NAEP at the user-friendly Web site of the National Center for Educational Statistics <http://nces.ed.gov/nationsreportcard/> (Dec. 4, 2003).
12 Albert Shanker Institute, "Education for Democracy" (2003) <http://www.ashankerinst.org/Downloads/EfD%20final.pdf> (Nov. 13, 2003), pp. 6, 7. Emphasis added.
13 History achieved a stronger position in the curriculum in the first two decades of the twentieth century than at any other time in American history. "By 1900," a historian of education wrote, "history . . . received more time and attention in both elementary and secondary schools than all the other social studies combined." History dominated from about 1890 to 1920, although its apex was probably about 1915. Edgar B. Wesley, "History in the School Curriculum," Mississippi Valley Historical Review, 29 (March 1943), 567.
14 On the school curriculum in this period, see Hazel W. Hertzberg, "History and Progressivism: A Century of Reform Proposals," in Historical Literacy: The Case for History in American Education, ed. Paul Gagnon and the Bradley Commission on History in Schools (New York, 1989), 69-102. Bell and McCollum, "Study of the Attainments of Pupils in United States History," 268.
15 The date for the first statement is May 9, 2002; see Associated Press Online, "HS Seniors Do Poorly on History Test," available at LexisNexis Academic. The second is from Diane Ravitch, Capitol Hill Hearing Testimony, April 10, 2003, LexisNexis Academic (Sept. 6, 2003).
16 Nevins, "American History for Americans," 6.
17 E. D. Hirsch cited in Chester E. Finn and Diane Ravitch, "Survey Results: U.S.
Seventeen-Year-Olds Know Shockingly Little about History and Literature," American School Board Journal, 174 (Oct. 1987), 32; Rod Paige quoted in "Students and U.S. Secretary of Education Present a Solution for U.S. Historical Illiteracy," June 8, 2002, Ascribe Newswire, available at LexisNexis Academic. For the adjective "shameful," see Ravitch and Finn, What Do Our Seventeen-Year-Olds Know?, 201. On the early volleys of what came to be known as the "history wars," see Arthur Zilversmit, "Another Report Card, Another 'F,'" Reviews in American History, 16 (June 1988), 314-20; and Sam Wineburg, Historical Thinking and Other Unnatural Acts: Charting the Future of Teaching the Past (Philadelphia, 2001), esp. 3-27. For manifestations of this neurosis in other national contexts, see Jack Granatstein, Who Killed Canadian History? (Toronto, 1998); and Yoram Bronowski, "A People without History," Haaretz (Tel Aviv), Jan. 1, 2000.
18 Similar test results mean, not that what students know today is identical to what they knew in the past, but "that each group performed about the same on the particular set of test questions designed for them to take. This observation is buttressed by the comparison of the distributions of item difficulty. The shape and location of these distributions for tests covering a span of 42 years are strikingly similar." Dale Whittington, "What Have Seventeen-Year-Olds Known in the Past?," American Educational Research Journal, 28 (Winter 1991), 759-80.
19 The origins of multiple-choice testing go back to the first mass-administered examination in American history, the Army Alpha and Beta, during World War I. See Daniel J. Kevles, "Testing the Army's Intelligence: Psychologists and the Military in World War I," Journal of American History, 55 (Dec.
1968), 565-81; Franz Samelson, "World War I Intelligence Testing and the Development of Psychology," Journal of the History of the Behavioral Sciences, 13 (July 1977), 274-82; and John Rury, "Race, Region, and Education: An Analysis of Black and White Scores on the 1917 Army Alpha Test," Journal of Negro Education, 57 (Winter 1988), 51-65. For critical and nontechnical overviews of modern testing, see Stephen Jay Gould, The Mismeasure of Man (New York, 1981); Banesh Hoffmann, The Tyranny of Testing (New York, 1962); Leon J. Kamin, The Science and Politics of IQ (Potomac, 1974); and Paul L. Houts, ed., The Myth of Measurability (New York, 1977). An evenhanded assessment from a major figure in modern psychometrics is Lee J. Cronbach, "Five Decades of Public Controversy over Mental Testing," American Psychologist, 30 (Jan. 1975), 1-14.
20 Cooperative Test Service of the American Council on Education, The Cooperative Achievement Tests: A Handbook Describing their Purpose, Content, and Interpretation (New York, 1936), 6.
21 For Garrison Keillor's famous phrase, see "Registered Trademarks and Service Marks" <http://www.prairiehome.org/content/trademarks.html> (Dec. 4, 2003).
22 Even professional historians do poorly when staring down items outside their research specializations. When historians trained at Berkeley, Harvard, and Stanford universities answered questions from a leading high school textbook, they scored a mere 35%--in some cases lower than a comparison group of high school students taking Advanced Placement U.S. history courses. See Samuel S. Wineburg, "Historical Problem Solving: A Study of the Cognitive Processes Used in the Evaluation of Documentary and Pictorial Evidence," Journal of Educational Psychology, 83 (no. 1, 1991), 73-87.
23 For non-multiple-choice items, the functional equivalent is the item-test correlation.
Biserial or item-test correlations range from -1.00 to +1.00, with -1.00 being a score for a completely ineffective test item and +1.00 one for a perfect item. A +1.00 correlation would be achieved if all students in the highest scoring group got a particular item correct and all students in the lower scoring group got it incorrect (conversely, for a perfect negative correlation). Most multiple-choice items on large-scale tests have biserial correlations that range from +.25 to +.50.
24 For recent updates on bias in large-scale achievement testing, see Roy O. Freedle, "Correcting the SAT's Ethnic and Social-Class Bias: A Method for Reestimating SAT Scores," Harvard Educational Review, 73 (Spring 2003), 1-43. For nontechnical overviews of Freedle's argument, see Jeffrey R. Young, "Researchers Charge Racial Bias on the SAT," Chronicle of Higher Education, Oct. 10, 2003, p. A34; and Jay Matthews, "The Bias Question," Atlantic Monthly, 292 (Nov. 2003), 130-40.
25 Perfect normal curves are extremely rare in nature and typically result from experiments in probability, such as tossing a thousand quarters in the air over and over, each time plotting the number of heads; as the number of tosses approaches infinity, the curve becomes more and more symmetrical. Only by fixing the results beforehand can something as diffuse as historical ability fall into such even and well-shaped patterns. The best critique of the normal curve is by a physicist; see Philip Morrison, "The Bell Shaped Pitfall," in Myth of Measurability, ed. Houts, 82-89. See also Irving M. Klotz, "Of Bell Curves, Gout, and Genius," Phi Delta Kappan, 77 (Dec. 1995), 279-80.
26 Whittington, "What Have Seventeen-Year-Olds Known in the Past?," 778. The use of biserials (and their equivalents, now employed with Item Response Theory methods) is predicated on the unidimensionality of the "construct" being tested.
That is, "historical knowledge" is considered a single entity, not a woolly construct composed of different factors and influences in the spirit of J. Carleton Bell's 1917 formulation. Typical of psychometric reasoning is the following statement: "Items that correlate less than .15 with the total test score should probably be restructured. One's best guess is that such items do not measure the same skill or ability as does the test on the whole. . . . Generally, a test is better (i.e., more reliable) the more homogeneous the items." Jerard Kehoe, "Basic Item Analysis for Multiple-Choice Tests," Practical Assessment, Research, and Evaluation, 4 (1995) <http://pareonline.net/getvn.asp?v=4&n=10/> (Jan. 13, 2004).
27 As the Cooperative Test Service explained to teachers in 1936, "The purpose of the test is to discover differences between individuals, and this must also be the purpose of each item in the test. Items that all students can answer will obviously not help to discover such differences and therefore the test should contain very few such items." Cooperative Test Service, Cooperative Achievement Tests, 6.
28 The first question is from the 1987 exam, the second from 2001. E. D. Hirsch, Cultural Literacy: What Every American Needs to Know (Boston, 1987).
29 Sean Wilentz, "The Past Is Not a Process," New York Times, April 20, 1996, p. E15. Wilentz predicted that the "historical illiteracy of today's student will only worsen in the generation to come," without referring to similar baleful predictions from 1917, 1943, 1976, or 1987. Ibid. Ravitch and Finn, What Do Our Seventeen-Year-Olds Know?, 194.
30 Maurice G. Baxter, Robert H. Ferrell, and John E. Wiltz, The Teaching of American History in High Schools (Bloomington, 1964); John I. Goodlad, A Place Called School (New York, 1984), esp. 212. See also James Howard and Thomas Mendenhall, Making History Come Alive (Washington, 1982); and Karen B.
Wiley and Jeanne Race, The Status of Pre-College Science, Mathematics, and Social Science Education: 1955-1975, vol. III: Social Science Education (Boulder, 1977).
31 Charles Silberman, Crisis in the Classroom: The Remaking of American Education (New York, 1970), 172; Larry Cuban, "Persistent Instruction: The High School Classroom, 1900-1980," Phi Delta Kappan, 64 (Oct. 1982), 113-18; Larry Cuban, How Teachers Taught: Constancy and Change in American Classrooms, 1890-1980 (New York, 1993); Roy Rosenzweig, "How Americans Use and Think about the Past: Implications from a National Survey for the Teaching of History," in Knowing, Teaching, and Learning History: National and International Perspectives, ed. Peter N. Stearns, Peter Seixas, and Sam Wineburg (New York, 2000), 275. The full survey is reported in Roy Rosenzweig and David Thelen, The Presence of the Past: Popular Uses of History in American Life (New York, 1998).
32 Textbook wars are often portrayed as political battles between Left and Right, but they also manifest internecine struggles within the Left. See the account of the Oakland, California, textbook adoption process in Todd Gitlin, The Twilight of Common Dreams: Why America Is Wracked by Culture Wars (New York, 1995). On textbooks, see Harriet Tyson Bernstein, A Conspiracy of Good Intentions: America's Textbook Fiasco (Washington, 1988); Frances FitzGerald, America Revised (New York, 1979); Gary B. Nash, Charlotte Crabtree, and Ross E. Dunn, History on Trial: Culture Wars and the Teaching of the Past (New York, 1997); Diane Ravitch, The Language Police (New York, 2003); and Jonathan Zimmerman, Whose America? Culture Wars in the Public Schools (Cambridge, Mass., 2002). On the readability of textbooks (or lack thereof), see Isabel L. Beck, Margaret G. McKeown, and E. W. Gromoll, "Learning from Social Studies Texts," Cognition and Instruction, 6 (no. 2, 1989), 99-158; and Richard J.
Paxton, "A Deafening Silence: History Textbooks and the Students Who Read Them," Review of Educational Research, 69 (Fall 1999), 315-39.
33 Diane Ravitch, "The Educational Backgrounds of History Teachers," in Knowing, Teaching, and Learning History, ed. Stearns, Seixas, and Wineburg, 143-55. Compare today's efforts at improving history instruction with the last federal infusion of millions into the history and social studies curriculum. An extensive evaluation of the 1960s curriculum projects found that by the mid-1970s, the effects of the "new social studies" had largely vanished from public schools. See Wiley and Race, Status of Pre-College Science, Mathematics, and Social Science Education, III; and Peter Dow, Schoolhouse Politics: Lessons from the Sputnik Era (Cambridge, Mass., 1991). On historians' involvement with the new social studies, see the statement by its leader, a Carnegie Mellon University historian: Edwin Fenton, The New Social Studies (New York, 1967). The jewel of the crown of these efforts was the Amherst History Project, whose curriculum materials remain in print. Richard H. Brown, the force behind the project, provided an early analysis of problems with its conceptualization and a requiem for it. See Richard H. Brown, "History as Discovery: An Interim Report on the Amherst Project," in Teaching the New Social Studies in Secondary Schools: An Inductive Approach, ed. Edwin Fenton (Boston, 1966), 443-51; and Richard H. Brown, "Learning How to Learn: The Amherst Project and History Education in the Schools," Social Studies, 87 (Nov.-Dec. 1996), 267-73.
34 See "Massachusetts Curricular Frameworks" <http://www.doe.mass.edu/frameworks/current.html> (Dec. 4, 2003); "Pa. History Is Put to the Test," Philadelphia Inquirer, June 4, 2003, available at LexisNexis Academic; "History-Social Science Framework for California Public Schools, Kindergarten through Grade Twelve" <http://www.cde.ca.gov/cdepress/hist-social-sci-frame.pdf> (Dec. 4, 2003); and "U.S.
History Framework for the 1994 and 2001 National Assessment of Educational Progress" <http://www.nagb.org/pubs/hframework2001.pdf> (Dec. 4, 2003). William Cronon, "History Forum: Teaching American History," American Scholar, 67 (Winter 1998), 91.
35 On contemporary learning theory, see a consensus report: U.S. National Research Council, How People Learn: Brain, Mind, Experience, and School (Washington, 1999), available online at <http://books.nap.edu/books/0309065577/html/index.html> (Dec. 17, 2003). The volume echoes statements first enunciated in Jerome Bruner, The Process of Education (Cambridge, Mass., 1960). |